Chapter 20

Simulation Environments

"The proper study of mankind is the science of design." — Herbert A. Simon

Chapter 20 delves into the pivotal role of simulation environments in reinforcement learning (RL), providing a comprehensive exploration of their mathematical foundations, conceptual frameworks, and practical implementations. Beginning with an introduction to the essential components of RL environments, the chapter systematically builds up from the theoretical underpinnings of Markov Decision Processes (MDPs) to the intricacies of state and action spaces. It then navigates through prominent simulation frameworks such as OpenAI’s Gym and Farama’s Gymnasium, highlighting their core abstractions and APIs. Recognizing the current instability of Rust-based crates for these frameworks, the chapter innovatively bridges Python and Rust through various integration strategies, including Foreign Function Interface (FFI) techniques and inter-process communication (IPC). Practical sections offer hands-on guidance for creating custom environments in both Python and Rust, demonstrating how to leverage Rust’s performance and safety features alongside Python’s extensive ecosystem. A detailed case study exemplifies the construction of a hybrid environment, showcasing the seamless interplay between the two languages. Advanced topics address scalability, distributed environments, and future developments, ensuring that readers are well-equipped to push the boundaries of RL simulations. Throughout, the chapter emphasizes best practices, providing clear code examples, visual aids, and actionable insights to facilitate the reader’s journey from foundational concepts to sophisticated implementations.

20.1. Introduction to Simulation Environments

In the realm of Reinforcement Learning (RL), simulation environments serve as the foundational platforms where agents learn to make decisions and execute actions to achieve specific goals. A simulation environment replicates the dynamics of real-world scenarios in a controlled and risk-free setting, allowing RL agents to interact, learn, and optimize their behaviors without the potential consequences associated with real-world experimentation. This abstraction is crucial not only for the initial training phases but also for the evaluation and benchmarking of RL algorithms, ensuring that agents can generalize their learned policies across diverse and complex tasks.

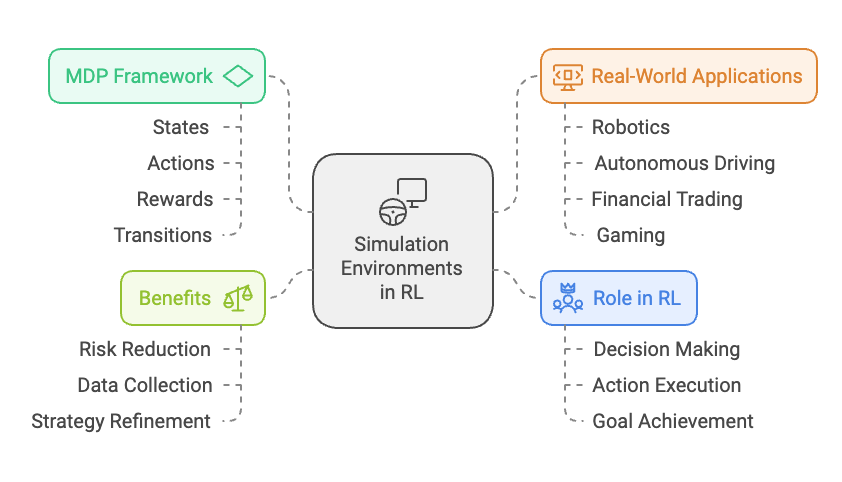

Figure 1: Scopes and applications of simulation in Reinforcement Learning.

The necessity of simulation environments in RL cannot be overstated. In real-world applications such as robotics, autonomous driving, and financial trading, deploying untested agents can lead to significant risks, including physical damage, financial loss, or unintended behaviors. Simulations provide a sandbox where agents can explore a wide range of scenarios, learn from their interactions, and refine their strategies iteratively. Moreover, simulations enable extensive data collection and experimentation, which are often impractical or impossible to achieve in real-world settings due to constraints like time, cost, and safety concerns. By offering a versatile and scalable testing ground, simulation environments accelerate the development and deployment of robust RL solutions.

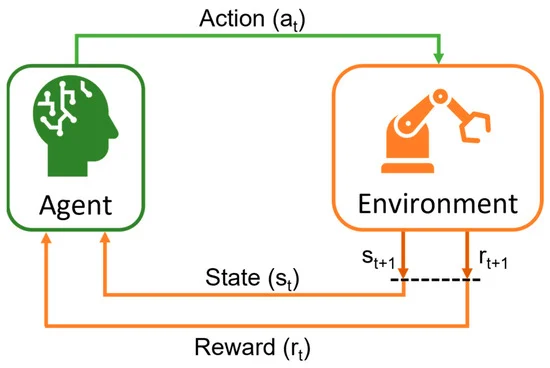

At the heart of any RL system lies the interaction loop between the agent and the environment. This loop is a continuous cycle where the agent perceives the current state of the environment, selects an action based on its policy, and receives feedback in the form of rewards and new states. Mathematically, this interaction can be modeled using Markov Decision Processes (MDPs), which provide a formal framework for decision-making in stochastic environments. An MDP is defined by a set of states $S$, a set of actions $A$, transition probabilities $P(s'|s,a)$, reward functions $R(s,a)$, and a discount factor $\gamma$. The Markov property ensures that the future state depends only on the current state and action, not on the sequence of events that preceded it, thereby simplifying the complexity of the learning process.

In an RL environment, the state $s$ represents the current situation of the agent within the environment, encapsulating all relevant information needed to make a decision. Actions $a$ are the possible moves or operations the agent can perform, influencing the transition to subsequent states. Rewards $r$ are scalar feedback signals that guide the agent towards desirable behaviors by quantifying the immediate benefit or cost of actions. Transitions $P(s'|s,a)$ describe the probability of moving to a new state $s'$ given the current state $s$ and action $a$, encapsulating the stochastic nature of real-world environments. Together, these components form the backbone of the RL framework, enabling agents to learn optimal policies $\pi(a|s)$ that maximize cumulative rewards over time.

Figure 2: Key ideas of Reinforcement Learning. Simulation is required for the environment.

Simulation environments are pivotal in a multitude of real-world applications where RL can be transformative. In robotics, simulations allow for the training of complex motor control systems that can later be deployed on physical robots, minimizing the risk of hardware damage and reducing development time. Autonomous vehicles benefit from simulations by exposing driving agents to a vast array of traffic scenarios, weather conditions, and unexpected obstacles, fostering the development of safe and reliable navigation policies. In the financial sector, RL agents can be trained in simulated trading environments to devise strategies that optimize portfolio management and trading decisions without the financial risks associated with live trading. Additionally, gaming and entertainment industries utilize simulation environments to create intelligent non-player characters (NPCs) that enhance user experiences by adapting to player behaviors in real-time.

Throughout this introductory section, you will embark on a comprehensive journey through the landscape of simulation environments in reinforcement learning. We will begin by delving into the mathematical foundations of RL environments, elucidating the principles of Markov Decision Processes and the intricacies of state and action spaces. The chapter will then explore prominent simulation frameworks such as OpenAI Gym and Farama Gymnasium, dissecting their architectural designs and conceptual abstractions. Recognizing the current limitations of Rust-based crates for these frameworks, we will investigate innovative integration strategies that bridge Python and Rust, leveraging the strengths of both languages to create robust and efficient RL environments. Practical implementation sections will provide hands-on guidance, featuring Rust code examples that utilize relevant crates to build and optimize custom simulation environments. By the end of this chapter, readers will possess a deep understanding of the theoretical underpinnings, conceptual frameworks, and practical skills necessary to design, implement, and evaluate sophisticated simulation environments for reinforcement learning.

To rigorously define simulation environments in RL, we turn to the mathematical framework of Markov Decision Processes (MDPs). An MDP provides a structured approach to modeling decision-making scenarios where outcomes are partly random and partly under the control of an agent. Formally, an MDP is represented as a tuple $(S, A, P, R, \gamma)$, where:

$S$ is a finite or infinite set of states representing all possible configurations of the environment.

$A$ is a finite or infinite set of actions available to the agent.

$P: S \times A \times S \rightarrow [0,1]$ denotes the state transition probabilities, where $P(s'|s,a)$ is the probability of transitioning to state $s'$ when action $a$ is taken in state $s$.

$R: S \times A \rightarrow \mathbb{R}$ is the reward function, assigning a real-valued reward to each state-action pair.

$\gamma \in [0,1)$ is the discount factor that quantifies the importance of future rewards.

The goal of the agent is to learn a policy $\pi: S \times A \rightarrow [0,1]$ that maximizes the expected cumulative discounted reward over time. The Bellman equation plays a pivotal role in this optimization, providing a recursive relationship for the value function $V^\pi(s)$, which represents the expected return starting from state $s$ and following policy $\pi$:

$$ V^\pi(s) = \sum_{a \in A} \pi(a|s) \left[ R(s,a) + \gamma \sum_{s' \in S} P(s'|s,a) V^\pi(s') \right] $$

This equation encapsulates the trade-off between immediate rewards and future value, guiding the agent towards optimal decision-making. Understanding and effectively utilizing these mathematical foundations is essential for designing robust simulation environments that accurately reflect the complexities of real-world scenarios.

Building upon the foundational framework of Markov Decision Processes (MDPs), it is essential to recognize that many real-world scenarios present complexities that extend beyond the assumptions inherent in MDPs. Specifically, in numerous applications, an agent does not have full visibility of the environment's state. This limitation gives rise to the more sophisticated framework of Partially Observable Markov Decision Processes (POMDPs), which provide a more realistic and nuanced model for decision-making under uncertainty.

A Partially Observable Markov Decision Process (POMDP) extends the MDP framework by incorporating the notion that the agent receives only partial information about the true state of the environment. Formally, a POMDP is defined as a tuple $(S, A, P, R, \Omega, O, \gamma)$, where:

$S$, $A$, $P$, $R$, and $\gamma$ retain their definitions from the MDP framework, representing the set of states, actions, state transition probabilities, reward function, and discount factor, respectively.

$\Omega$ is a finite or infinite set of observations that the agent can receive.

$O: S \times A \times \Omega \rightarrow [0,1]$ denotes the observation probabilities, where $O(o|s',a)$ is the probability of observing $o$ given that the agent took action aaa and transitioned to state $s'$.

In a POMDP, the agent does not directly observe the underlying state $s \in S$. Instead, after taking an action $a$, the agent receives an observation $o \in \Omega$ that provides partial information about the new state $s'$. This partial observability introduces significant challenges in both the representation and computation of optimal policies, as the agent must infer the hidden state based on the history of actions and observations.

The introduction of observations transforms the agent's knowledge from being state-based to belief-based. A belief state $b$ is a probability distribution over all possible states, representing the agent's current estimate of the environment's state given its history of actions and observations. The belief update process involves Bayesian inference, where the agent updates its belief state based on the new observation received after taking an action. Mathematically, the belief update can be expressed as:

$$ b'(s') = \frac{O(o|s',a) \sum_{s \in S} P(s'|s,a) b(s)}{P(o|a,b)} $$

where $P(o|a,b)$ is the probability of receiving observation $o$ given action $a$ and belief state $b$.

Solving POMDPs is inherently more complex than solving MDPs due to the additional layer of uncertainty introduced by partial observability. The optimal policy in a POMDP must map belief states to actions, rather than mapping concrete states to actions as in MDPs. This shift necessitates more advanced algorithms and representations, such as belief state approximation, policy search methods, and the use of recurrent neural networks to maintain an internal state that captures historical information.

In the context of simulation environments, incorporating POMDPs allows for the creation of more realistic and challenging scenarios where the agent must operate under uncertainty and make inferences based on incomplete information. For instance, in autonomous driving simulations, an agent may not have perfect visibility of all surrounding vehicles and obstacles due to sensor limitations or environmental conditions like fog and rain. Similarly, in robotics, an agent may only have access to noisy sensor data, requiring it to infer the true state of its environment to perform tasks effectively.

By modeling environments as POMDPs, researchers and practitioners can develop and evaluate RL algorithms that are better suited for real-world applications where uncertainty and partial observability are the norms rather than the exceptions. This advancement not only enhances the robustness and adaptability of RL agents but also bridges the gap between theoretical research and practical deployment in complex, dynamic environments.

To bridge theory with practice, let us explore the implementation of a simple RL environment in Rust. We'll utilize the gym-rs crate, a Rust binding for OpenAI Gym, to create a custom environment. This example will demonstrate defining state and action spaces, implementing environment dynamics, and integrating with an RL agent.

Now, let's implement a basic grid world environment. The provided code simulates a basic reinforcement learning environment called "GridWorld," where an agent navigates a 5x5 grid to reach a predefined goal. The environment is structured as a grid with a start point at the top-left corner (0,0) and a goal at the bottom-right corner (4,4). The agent can take one of four actions—move up, down, left, or right—until it either reaches the goal or exhausts its moves.

[dependencies]

gym-rs = "0.3.0"

rand = "0.8.5"

use gym_rs::{Action, Env, Observation, Reward};

use rand::Rng;

/// Represents the state of the agent in the grid.

#[derive(Debug, Clone, PartialEq)]

struct GridState {

x: usize,

y: usize,

}

/// Defines the possible actions the agent can take.

#[derive(Clone)]

enum GridAction {

Up,

Down,

Left,

Right,

}

/// Custom GridWorld environment implementing the Env trait.

struct GridWorld {

state: GridState,

goal: GridState,

grid_size: usize,

}

impl GridWorld {

/// Initializes a new GridWorld environment.

fn new(grid_size: usize) -> Self {

GridWorld {

state: GridState { x: 0, y: 0 },

goal: GridState {

x: grid_size - 1,

y: grid_size - 1,

},

grid_size,

}

}

/// Resets the environment to the initial state.

fn reset(&mut self) -> GridState {

self.state = GridState { x: 0, y: 0 };

self.state.clone()

}

/// Applies an action and returns the new state, reward, and done flag.

fn step(&mut self, action: &GridAction) -> (GridState, Reward, bool) {

match action {

GridAction::Up if self.state.y > 0 => self.state.y -= 1,

GridAction::Down if self.state.y < self.grid_size - 1 => self.state.y += 1,

GridAction::Left if self.state.x > 0 => self.state.x -= 1,

GridAction::Right if self.state.x < self.grid_size - 1 => self.state.x += 1,

_ => (), // Invalid move, no state change

}

// Reward structure: -1 for each step, +10 for reaching the goal

let reward = if self.state == self.goal {

10.0

} else {

-1.0

};

// Episode is done if the agent reaches the goal

let done = self.state == self.goal;

(self.state.clone(), reward, done)

}

}

impl Env for GridWorld {

type State = GridState;

type Action = GridAction;

fn reset(&mut self) -> Observation<Self::State> {

Observation::new(self.reset())

}

fn step(&mut self, action: Action<Self::Action>) -> (Observation<Self::State>, Reward, bool) {

let (new_state, reward, done) = self.step(&action.unwrap());

(Observation::new(new_state), reward, done)

}

}

fn main() {

let mut env = GridWorld::new(5);

let mut rng = rand::thread_rng();

// Reset the environment

let mut observation = env.reset();

println!("Initial State: {:?}", observation.state);

// Simple random agent

loop {

// Choose a random action

let action = Action::new(match rng.gen_range(0..4) {

0 => GridAction::Up,

1 => GridAction::Down,

2 => GridAction::Left,

_ => GridAction::Right,

});

// Take a step in the environment

let (new_observation, reward, done) = env.step(action);

println!(

"Action: {:?}, New State: {:?}, Reward: {}, Done: {}",

action.unwrap(), new_observation.state, reward, done

);

observation = new_observation;

if done {

println!("Goal reached!");

break;

}

}

}

The provided Rust code constructs a reinforcement learning environment using the gym_rs crate, which offers standardized interfaces for agent-environment interactions. At its core, the GridWorld environment represents the agent's state within a 5x5 grid using the GridState struct, defined by x and y coordinates. The GridAction enum specifies the agent's possible actions—moving up, down, left, or right—while the GridWorld struct implements the Env trait, encapsulating the current state, goal state, and grid size to define the environment's structure and dynamics. The reset method initializes the agent's position at (0, 0), setting up the environment for a new episode. The step method processes the agent's selected action, updates its state, calculates rewards (-1 per step, +10 for reaching the goal), and determines if the goal is achieved. Additionally, the rand crate is used to simulate a random agent, choosing actions arbitrarily. The main function demonstrates this interaction loop, highlighting the fundamentals of reinforcement learning and Rust's capability to create efficient, robust simulation environments.

This example illustrates the fundamental components of an RL environment: state representation, action definitions, environment dynamics, and reward structures. By implementing the Env trait, we ensure compatibility with RL algorithms that can interact seamlessly with the environment. Leveraging Rust’s performance and safety features, this custom environment provides a robust foundation for training and evaluating RL agents. When running the code, the agent begins at (0, 0) and performs random moves until it reaches the goal at (4, 4). The output logs each action, the resulting state, the immediate reward, and whether the goal has been achieved. The program demonstrates key reinforcement learning principles, including state transitions and a simple reward system. The random agent is inefficient, often taking many steps to reach the goal. This highlights the importance of more advanced strategies, such as policy optimization, for achieving better performance in similar tasks.

Simulation environments extend their utility across a myriad of real-world applications, each benefiting uniquely from the controlled and adaptable nature of simulations. In robotics, for instance, simulation environments enable the training of complex motor control systems without the wear and tear associated with physical hardware. Robots can practice navigation, manipulation, and interaction tasks in diverse simulated terrains, accelerating the development cycle and ensuring safety before deployment in real-world settings.

Autonomous vehicles represent another critical domain where simulation environments are indispensable. These environments allow for the testing of driving policies under a vast array of conditions, including different weather scenarios, traffic densities, and unexpected obstacles. By simulating rare and hazardous situations, developers can train autonomous systems to handle edge cases that would be impractical or dangerous to replicate in real life. This comprehensive training enhances the reliability and safety of autonomous driving technologies.

In the financial sector, RL agents trained within simulation environments can devise sophisticated trading strategies and portfolio management techniques. Simulated trading environments can mimic market conditions, economic indicators, and investor behaviors, providing a rich dataset for agents to learn from. This approach minimizes the financial risks associated with live trading experiments and allows for extensive backtesting of strategies across various market scenarios.

Gaming and entertainment industries also leverage simulation environments to create intelligent non-player characters (NPCs) that adapt to player behaviors in real-time. By training RL agents within simulated game environments, developers can enhance the realism and challenge of NPCs, providing more engaging and dynamic gaming experiences. Additionally, multi-agent simulations facilitate the development of cooperative and competitive behaviors, enriching the interactive elements of games.

These examples underscore the versatility and critical importance of simulation environments in advancing RL applications. By providing a versatile platform for experimentation, training, and evaluation, simulation environments empower developers and researchers to push the boundaries of what RL agents can achieve across diverse and complex tasks.

Recognizing the current limitations of Rust-based crates for these frameworks, we will explore innovative integration strategies that bridge Python and Rust, harnessing the strengths of both languages to create robust and efficient RL environments. Practical implementation sections will provide hands-on guidance, featuring Rust code examples that utilize relevant crates to build and optimize custom simulation environments. These sections will demonstrate how to define state and action spaces, implement environment dynamics, and integrate performance-critical components using Rust, all while maintaining compatibility with Python-based RL algorithms.

Below is another Rust code for the custom GridWorld environment, utilizing the gym-rs crate. The main differences compared to previous code lie in their implementation of the gym_rs environment and their complexity. This code is more comprehensive and uses more advanced Rust features, such as implementing additional traits like Sample, Serialize, and Deserialize, and includes more detailed space definitions with Discrete and BoxR observation spaces. It also introduces more robust randomization with Pcg64, provides a metadata method, adds rendering capabilities, and includes more explicit type handling with ActionReward and EnvProperties traits. In contrast, the previous code is a simpler, more straightforward implementation of the same GridWorld environment, with less explicit type definitions and fewer trait implementations, focusing on the core mechanics of the grid-based reinforcement learning scenario.

The code implements a customized RL environment called GridWorld, where an agent navigates a grid from a starting point $(0,0)$ to a goal point at the bottom-right corner. The environment is structured using the gym_rs crate, following the standard gym-like interface with methods for resetting the environment and taking steps. The agent uses a random action selection strategy, choosing between moving up, down, left, or right in each iteration. The environment provides a reward system where the agent receives -1 for each move and +10 for reaching the goal, with the episode terminating once the goal is achieved. The code demonstrates a basic reinforcement learning setup, showing how to create a custom environment, implement the Env trait, and simulate an agent's interactions with the environment through random exploration.

[dependencies]

gym-rs = "0.3.0"

rand = "0.8.5"

rand_pcg = "0.3.1"

serde = "1.0.216"

serde_json = "1.0.133"

use gym_rs::{

core::{ActionReward, Env, EnvProperties},

spaces::{BoxR, Discrete},

utils::custom::structs::Metadata,

utils::custom::traits::Sample, // Import Sample from the correct location

utils::renderer::{RenderMode, Renders},

};

use rand::Rng;

use rand_pcg::Pcg64; // Use a publicly accessible RNG from rand_pcg

use serde::{Deserialize, Serialize};

#[derive(Debug, Clone, PartialEq, Serialize, Deserialize)]

struct GridState {

x: usize,

y: usize,

}

// Implement required traits for the observation type

impl Sample for GridState {}

impl Into<Vec<f64>> for GridState {

fn into(self) -> Vec<f64> {

vec![self.x as f64, self.y as f64]

}

}

#[derive(Debug, Clone, Serialize, Deserialize)]

enum GridAction {

Up,

Down,

Left,

Right,

}

#[derive(Debug, Clone, Serialize, Deserialize)]

struct GridWorld {

state: GridState,

goal: GridState,

grid_size: usize,

#[serde(skip)]

action_space: Discrete,

#[serde(skip)]

observation_space: BoxR<f64>,

#[serde(skip)]

metadata: Metadata<Self>,

#[serde(skip)]

random: Pcg64, // Use Pcg64 instead of Lcg128Xsl64

}

impl GridWorld {

fn new(grid_size: usize) -> Self {

let action_space = Discrete { n: 4 };

let observation_space = BoxR::<f64>::new(

vec![0.0, 0.0],

vec![(grid_size - 1) as f64, (grid_size - 1) as f64],

);

let metadata = Metadata::default();

let random = Pcg64::seed_from_u64(0);

GridWorld {

state: GridState { x: 0, y: 0 },

goal: GridState {

x: grid_size - 1,

y: grid_size - 1,

},

grid_size,

action_space,

observation_space,

metadata,

random,

}

}

fn move_agent(&mut self, action: &GridAction) {

match action {

GridAction::Up if self.state.y > 0 => self.state.y -= 1,

GridAction::Down if self.state.y < self.grid_size - 1 => self.state.y += 1,

GridAction::Left if self.state.x > 0 => self.state.x -= 1,

GridAction::Right if self.state.x < self.grid_size - 1 => self.state.x += 1,

_ => (),

}

}

}

impl Env for GridWorld {

type Action = GridAction;

type Observation = GridState;

type ResetInfo = ();

type Info = ();

fn reset(

&mut self,

_seed: Option<u64>,

_flag: bool,

_options: Option<BoxR<Self::Observation>>, // Updated type here

) -> (Self::Observation, Option<Self::ResetInfo>) {

self.state = GridState { x: 0, y: 0 };

(self.state.clone(), None)

}

fn step(

&mut self,

action: Self::Action,

) -> ActionReward<Self::Observation, Self::Info> {

self.move_agent(&action);

let reward = if self.state == self.goal { 10.0 } else { -1.0 };

let done = self.state == self.goal;

(self.state.clone(), reward, done, ())

}

fn render(&mut self, _mode: RenderMode) -> Renders {

println!("Current state: {:?}", self.state);

Renders::None

}

fn close(&mut self) {}

}

impl EnvProperties for GridWorld {

type ActionSpace = Discrete;

type ObservationSpace = BoxR<f64>;

fn metadata(&self) -> &Metadata<Self> {

&self.metadata

}

fn rand_random(&self) -> &Pcg64 {

&self.random

}

fn action_space(&self) -> &Self::ActionSpace {

&self.action_space

}

fn observation_space(&self) -> &Self::ObservationSpace {

&self.observation_space

}

}

fn main() {

let mut env = GridWorld::new(5);

let mut rng = rand::thread_rng();

let (mut observation, _) = env.reset(None, true, None);

println!("Initial State: {:?}", observation);

loop {

let action = match rng.gen_range(0..4) {

0 => GridAction::Up,

1 => GridAction::Down,

2 => GridAction::Left,

_ => GridAction::Right,

};

let (new_observation, reward, done, _) = env.step(action.clone());

println!(

"Action: {:?}, New State: {:?}, Reward: {}, Done: {}",

action, new_observation, reward, done

);

observation = new_observation;

if done {

println!("Goal reached!");

break;

}

}

}

The GridWorld environment in this Rust code is a structured playground for reinforcement learning, simulating an agent's navigation through a grid. At its core, the GridState struct tracks the agent's position using x and y coordinates, while the GridAction enum defines the possible movements: up, down, left, and right. The GridWorld struct manages the environment's state, including the current position, goal location, and grid size. When initialized, it sets up a grid where the agent starts at (0, 0) and aims to reach the bottom-right corner. The reset method returns the agent to the starting point, preparing for a new episode, and the step method processes the agent's actions by updating its position, calculating rewards (positive for reaching the goal, negative for each move), and determining when the episode ends. The main function demonstrates this by creating an agent that randomly explores the grid, moving and receiving feedback until it successfully reaches the goal, showcasing a basic yet fundamental approach to reinforcement learning interaction.

This implementation serves as a foundational example of how to create custom RL environments in Rust, leveraging the safety and performance benefits of the language. By adhering to the Gym interface, this environment can seamlessly integrate with a wide range of RL agents and algorithms, facilitating robust and efficient training processes.

The introduction to simulation environments lays the groundwork for understanding the pivotal role these environments play in the development and evaluation of reinforcement learning agents. By defining simulation environments through the lens of Markov Decision Processes, we establish a rigorous mathematical framework that captures the essence of decision-making under uncertainty. The exploration of the RL interaction loop and its core components—states, actions, rewards, and transitions—provides a clear conceptual model that underpins all RL algorithms.

Through practical implementation in Rust, we bridge the gap between theory and practice, demonstrating how to construct a simple yet effective RL environment that adheres to established frameworks like OpenAI Gym. This hands-on example not only reinforces the theoretical concepts but also highlights the advantages of using Rust for building high-performance and reliable simulation environments. As we progress through the chapter, we will delve deeper into more complex environments, integration strategies between Python and Rust, and advanced techniques for optimizing and scaling RL simulations.

Ultimately, mastering simulation environments is essential for anyone seeking to advance in the field of reinforcement learning. These environments provide the necessary infrastructure for training agents, testing hypotheses, and benchmarking algorithm performance, all while ensuring that the learning processes are both safe and scalable. By equipping readers with both the theoretical knowledge and practical skills to design and implement sophisticated simulation environments, this chapter serves as a cornerstone for developing robust and effective RL systems that can tackle real-world challenges with confidence and precision.

20.2. Prominent Simulation Frameworks

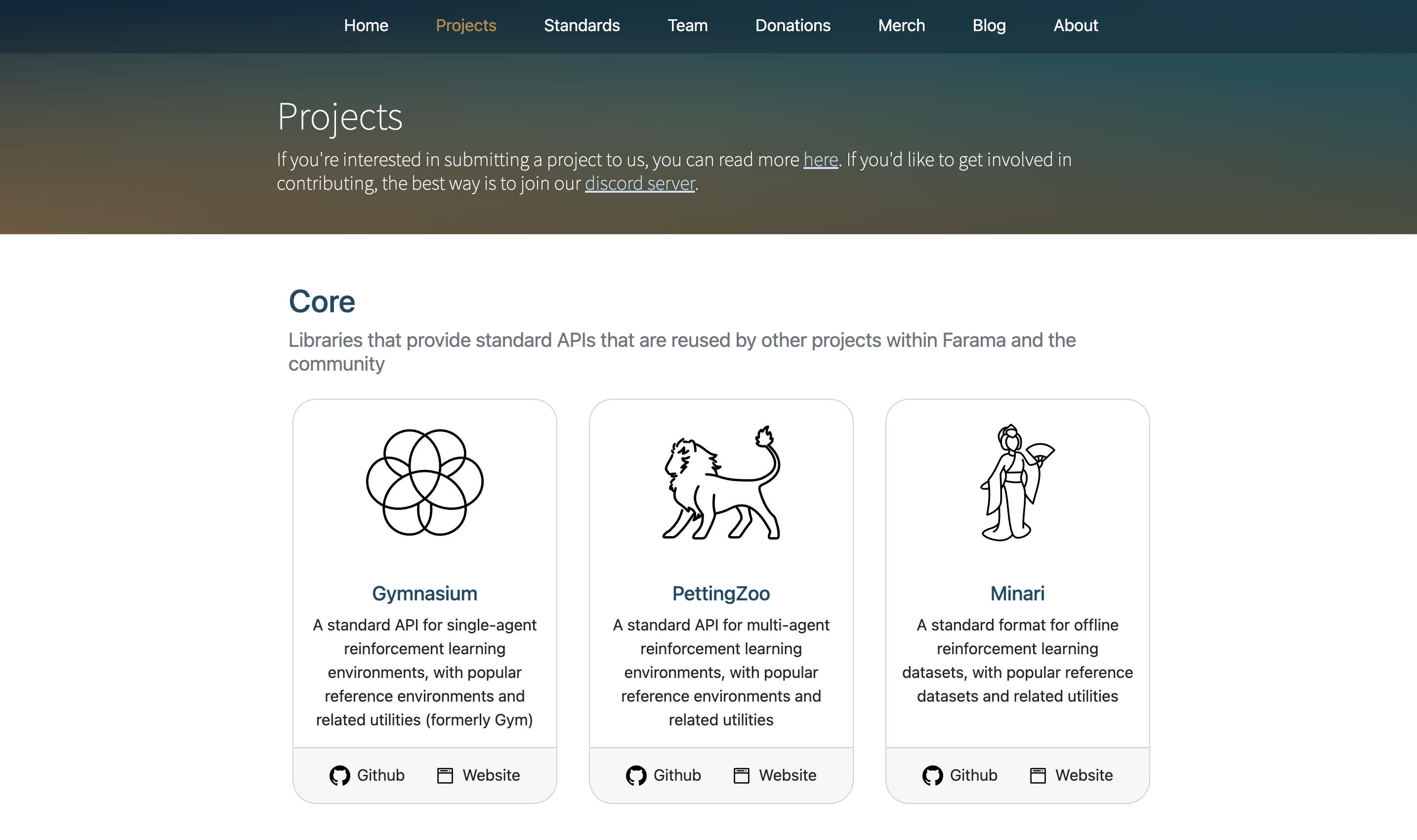

The history and evolution of reinforcement learning simulation frameworks have been shaped by the growing need to standardize, benchmark, and accelerate research in complex decision-making environments. In the early days of RL, researchers often had to craft their own environments, leading to a proliferation of ad-hoc implementations that were difficult to compare or reproduce. The arrival of standardized frameworks like OpenAI Gym revolutionized the field by providing a unified interface for interacting with a wide variety of simulated tasks. OpenAI Gym emerged as a response to the community’s call for better benchmarks, offering a consistent environment API and a diverse suite of tasks ranging from classic control problems to high-dimensional Atari games. More recently, the Farama Gymnasium project—an effort led by the RL community—aims to extend, refine, and modernize the Gym interface, addressing legacy issues and incorporating new design principles. By integrating lessons learned from Gym’s widespread adoption and feedback from researchers, Gymnasium builds upon its predecessor’s successes and sets the stage for even more robust and flexible RL simulation frameworks.

Figure 3: Farama projects - the World's Open Source Reinforcement Learning Tools (www.farama.org).

From a conceptual standpoint, frameworks like Gym and Gymnasium are popular because they implement a clear and consistent abstraction that aligns neatly with the Markov Decision Process (MDP) formulation. In an MDP, an agent interacts with an environment defined by a set of states $S$, a set of actions $A$, a transition function $P(s'|s,a)$, and a reward function $R(s,a)$. The agent selects an action $a$ based on the current state $s$ and receives a reward $r$ along with a new state $s'$. Over time, the agent’s goal is to learn a policy $\pi(a|s)$ that maximizes the expected sum of discounted rewards. Frameworks like Gym and Gymnasium encapsulate this cycle with a standardized interface, typically providing reset() and step() functions. The reset() function returns an initial state observation from the environment, while step(action) takes an action and returns the next observation, the received reward, a boolean flag indicating whether the episode has ended, and additional diagnostic information. This tight alignment with the MDP structure greatly simplifies implementation details, allowing researchers to focus on algorithm development rather than environment engineering.

Mathematically, these frameworks make the MDP formulation more tangible. Consider an agent interacting with an environment: at each time step $t$, the environment returns a state $s_t$. The agent picks an action $a_t$, and the environment responds with a new state $s_{t+1}$ drawn from the probability distribution $P(s_{t+1}|s_t,a_t)$ and a reward $r_t = R(s_t,a_t)$. Over an episode, the agent accumulates rewards $\sum_t \gamma^t r_t$, where $\gamma \in [0,1)$ is the discount factor. By providing a consistent API, Gym and Gymnasium handle the complexities of state transitions, reward computations, and environmental bookkeeping, leaving the researcher free to experiment with different policies, function approximators, and training schemes. Though the underlying mathematics might seem abstract, the frameworks distill this complexity into a few intuitive methods and a well-defined data flow.

On a practical level, selecting the right framework for a given research or development need can be guided by a comparison of their features. OpenAI Gym boasts a wide variety of environments and a large user community, making it an excellent starting point for many researchers. Farama Gymnasium, on the other hand, refines the API and introduces improved environment wrappers, clearer versioning, and better extensibility. A rough comparison of their features is presented below:

~~~{list-table} :header-rows: 1 :name: F7pF9vgn4H

* - Feature

OpenAI Gym

Farama Gymnasium

* - Environment Diversity

Extensive (Atari, MuJoCo, Classic Control, etc.)

Comparable, with plans to expand and modernize

* - API Stability

Mature but some legacy issues

Modernized API, improved wrapper design

* - Community & Ecosystem

Large user base, many external tools

Growing community, building upon Gym’s legacy

* - Extensibility & Modularity

Good, but certain patterns are now outdated

Enhanced extensibility and clearer best practices

* - Python Integration

Native Python environments

Python-centric, aiming for multi-language support

* - Versioning & Benchmarking

Basic versioning, no leaderboards

Clear versioning, community-driven curation and standardization

When choosing a framework, one might consider criteria such as the complexity of the tasks at hand, the desired level of extensibility, compatibility with existing libraries, and community support. For those embarking on a new RL project, Gym remains an excellent entry point due to its widespread adoption and extensive documentation. For researchers looking to embrace cutting-edge design principles and more flexible integration with future environment collections, Gymnasium provides a promising alternative. Ultimately, the decision depends on balancing current research needs, computational resources, and the long-term vision for the project.

While Gym and Gymnasium are primarily Python-based ecosystems, the RL community continuously explores polyglot and high-performance approaches. The gym_rs is a Rust implementation of environments inspired by OpenAI's Gym, which is widely used for developing and comparing reinforcement learning (RL) algorithms. This library provides simulation environments for various control tasks, enabling developers to interact programmatically with these environments while focusing on creating, training, and evaluating RL agents. It supports classical control problems like MountainCar, CartPole, and others, providing tools for rendering, state observation, and reward-based decision-making in a modular and efficient way.

use gym_rs::{

core::{ActionReward, Env},

envs::classical_control::mountain_car::MountainCarEnv,

utils::renderer::RenderMode,

};

use rand::{thread_rng, Rng};

fn main() {

let mut mc = MountainCarEnv::new(RenderMode::Human);

let _state = mc.reset(None, false, None);

let mut rng = thread_rng();

let mut end: bool = false;

let mut episode_length = 0;

while !end {

if episode_length > 200 {

break;

}

let action = rng.gen_range(0..3);

let ActionReward { done, .. } = mc.step(action);

episode_length += 1;

end = done;

println!("episode_length: {}", episode_length);

}

mc.close();

for _ in 0..200 {

let action = rng.gen_range(0..3);

mc.step(action);

episode_length += 1;

println!("episode_length: {}", episode_length);

}

}

The provided code demonstrates the use of gym_rs to interact with the MountainCar environment, a classic RL problem where an agent must learn to push a car up a mountain using limited momentum and gravity. The environment is initialized in human rendering mode, and the car's state is reset at the beginning. A random policy is used to choose actions (0, 1, or 2, corresponding to left, no action, or right), and the simulation steps forward with each action. The episode ends when the car reaches the goal or exceeds 200 time steps. The episode_length counter keeps track of the number of actions taken, and the program prints this value after each step. The loop ensures the environment closes properly and performs an additional set of actions for demonstration purposes.

The next code demonstrates the use of the gym_rs library to interact with the CartPole environment, a classic reinforcement learning (RL) task. The CartPole task involves balancing a pole on a moving cart by applying left or right forces, where the agent receives rewards for keeping the pole upright and penalized if the pole falls or the cart moves out of bounds. The environment is initialized in human rendering mode, and the simulation runs multiple episodes, using random actions to explore the environment.

use gym_rs::{

core::Env, envs::classical_control::cartpole::CartPoleEnv, utils::renderer::RenderMode,

};

use ordered_float::OrderedFloat;

use rand::{thread_rng, Rng};

fn main() {

let mut env = CartPoleEnv::new(RenderMode::Human);

env.reset(None, false, None);

const N: usize = 15;

let mut rewards = Vec::with_capacity(N);

let mut rng = thread_rng();

for _ in 0..N {

let mut current_reward = OrderedFloat(0.);

for _ in 0..475 {

let action = rng.gen_range(0..=1);

let state_reward = env.step(action);

current_reward += state_reward.reward;

if state_reward.done {

break;

}

}

env.reset(None, false, None);

rewards.push(current_reward);

}

println!("{:?}", rewards);

}

The program initializes the CartPole environment and runs a series of episodes (N = 15). In each episode, the agent takes up to 475 steps, choosing random actions (0 for left or 1 for right) and accumulating rewards based on the environment's feedback. The cumulative reward for each episode is tracked using the OrderedFloat wrapper to ensure proper handling of floating-point comparisons. If the episode ends prematurely due to the pole falling or the cart moving out of bounds, the environment resets to start a new episode. After all episodes are completed, the program prints the collected rewards for each episode, showcasing the agent's random performance in the CartPole environment.

The next code connects to a running Gym server via gym-rs, a Rust crate providing interfaces to Gym environments through a REST API. After creating an instance of a classic control environment like CartPole-v1, the code resets the environment to obtain the initial observation. It then enters a loop, choosing random actions at each step and printing out the resulting observations, rewards, and termination signals. Although this example uses a simplistic random policy, it clearly demonstrates the RL interaction loop in a Rust-based setting. By substituting random actions with learned policies, researchers can integrate advanced RL algorithms implemented in Rust, harnessing both the performance and safety benefits of the language while interacting seamlessly with popular RL frameworks.

use gym_rs::core::Env;

use gym_rs::envs::remote::RemoteEnv;

use rand::{thread_rng, Rng};

fn main() {

// Connect to a running Gym server. Make sure you have a Gym HTTP server running,

// for example using the gym_http_server tool provided by gym-http-api or similar.

// Adjust the URL and environment ID as needed.

let mut env = RemoteEnv::new("CartPole-v1", "http://127.0.0.1:5000", None)

.expect("Failed to connect to the remote Gym server.");

// Reset the environment to get the initial observation.

let mut observation = env.reset(None, false, None);

println!("Initial observation: {:?}", observation);

let mut rng = thread_rng();

// Run a loop of interaction steps. Press Ctrl+C to stop.

loop {

// Select a random action. For CartPole-v1, valid actions are typically 0 or 1.

let action = rng.gen_range(0..2);

// Take a step in the environment using the chosen action.

let step_result = env.step(action);

// Print the result of the step: observation, reward, and whether the episode ended.

println!(

"Action: {}, Observation: {:?}, Reward: {}, Done: {}",

action,

step_result.observation,

step_result.reward,

step_result.done

);

// If the episode ended, reset the environment.

if step_result.done {

observation = env.reset(None, false, None);

println!("Episode finished. Resetting environment. New initial observation: {:?}", observation);

}

}

}

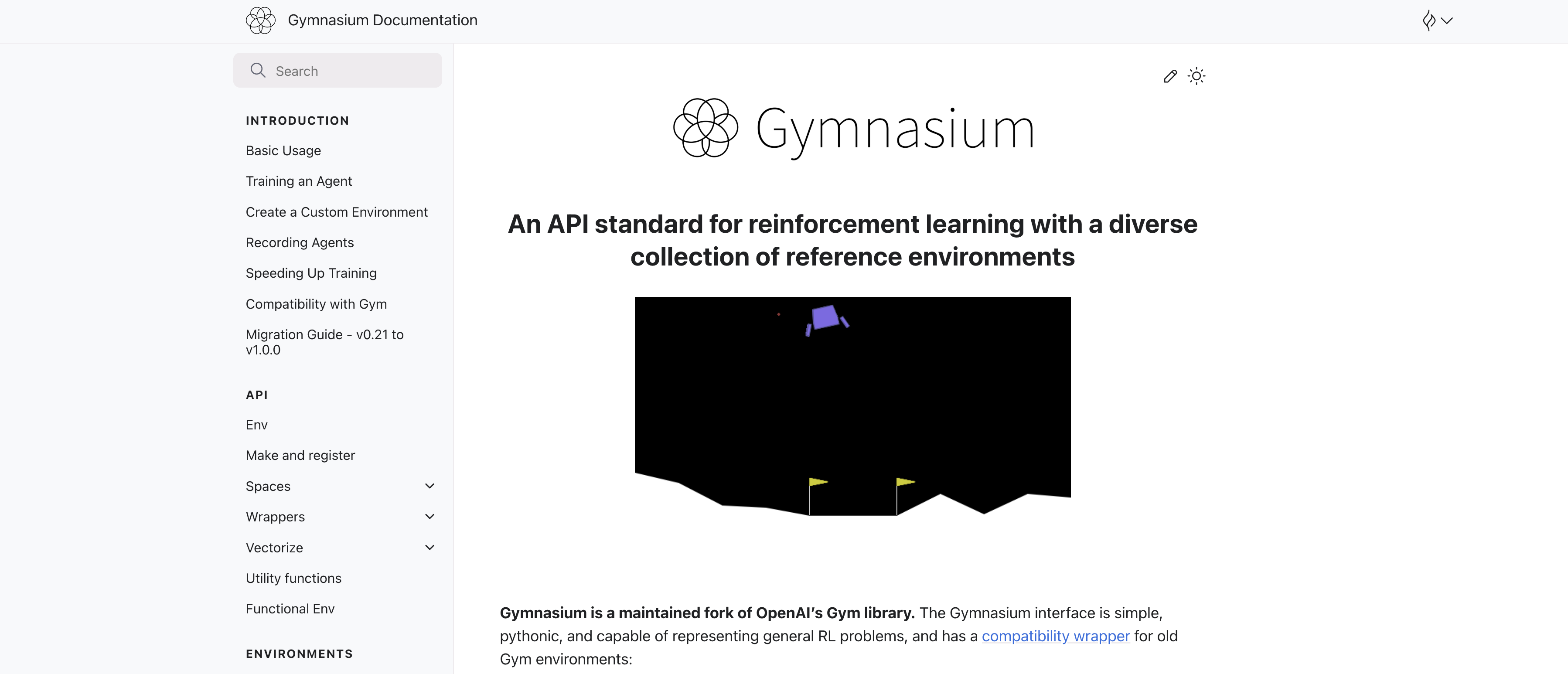

Gymnasium is a robust and actively maintained fork of OpenAI’s seminal Gym library, designed to serve as a comprehensive API for single-agent reinforcement learning (RL) environments. With its simple, pythonic interface, Gymnasium excels in representing a wide array of RL problems, ranging from classical control tasks like CartPole, Pendulum, and MountainCar to more complex environments such as MuJoCo and Atari games. One of its standout features is the compatibility wrapper, which ensures seamless integration with legacy Gym environments, thereby facilitating the transition for projects and researchers accustomed to the original Gym framework. Gymnasium's architecture revolves around the Env class, a high-level Python class that encapsulates the essence of a Markov Decision Process (MDP) as understood in reinforcement learning theory. Although it does not capture every nuance of MDPs, the Env class provides essential functionalities: generating initial states, handling state transitions based on agent actions, and offering visualization capabilities through rendering.

Figure 4: Gymnasium project of Farama Foundation.

At the heart of Gymnasium are four key functions that define the agent-environment interaction loop: make(), Env.reset(), Env.step(), and Env.render(). The make() function is used to instantiate a specific environment, providing a straightforward way to select from the multitude of available tasks. Once an environment is created, Env.reset() initializes it to a starting state, preparing it for a new episode. The Env.step(action) function allows the agent to take an action within the environment, resulting in a transition to a new state, the receipt of a reward, and a flag indicating whether the episode has terminated. Finally, Env.render() offers a visualization of the current state of the environment, which is invaluable for debugging and monitoring the agent’s performance.

Complementing the Env class are various Wrapper classes that enhance and modify the environment's behavior without altering its core functionalities. These wrappers can modify observations, rewards, and actions, providing flexibility for preprocessing and feature extraction, which are crucial for developing sophisticated RL agents. Gymnasium's design emphasizes modularity and extensibility, allowing researchers and developers to easily augment environments to suit their specific needs.

In the context of reinforcement learning, Gymnasium embodies the classic "agent-environment loop." This fundamental concept illustrates how an agent interacts with its environment: the agent receives an observation about the current state of the environment, selects an action based on this observation, and then the environment responds by transitioning to a new state and providing a corresponding reward. This cycle continues iteratively until the environment signals termination, marking the end of an episode. Gymnasium's intuitive API facilitates this interaction loop, making it an essential tool for experimenting with and advancing RL algorithms. By leveraging Gymnasium, researchers can focus on developing and refining their RL models, confident in the library's ability to handle the complexities of environment management and interaction.

Recently, gymnasium_rs is a promosing and evolving project that aims to provide a pure Rust implementation of the Gymnasium API, the widely recognized framework for reinforcement learning (RL) environments originally developed in Python. By mirroring the Gymnasium interface, gymnasium_rs seeks to offer Rust developers a familiar and efficient toolkit for designing, interacting with, and managing RL environments, leveraging Rust's performance, safety, and concurrency advantages. This implementation ensures compatibility and interoperability with the existing Python-based Gymnasium environments, enabling seamless integration between Rust and Python ecosystems. Through features such as compatibility wrappers and standardized API functions (make(), reset(), step(), and render()), gymnasium_rs facilitates the use of established Gymnasium environments like CartPole, MountainCar, and Atari within Rust applications. Additionally, it supports the creation of custom environments, allowing researchers and developers to build and experiment with RL algorithms in Rust while maintaining the ability to utilize Python’s extensive RL libraries and tools. Although still a work in progress, gymnasium_rs is progressively enhancing its feature set, addressing compatibility issues, and optimizing performance to provide a robust foundation for Rust-based RL projects. By bridging the gap between Rust’s systems-level capabilities and Gymnasium’s comprehensive RL environment suite, gymnasium_rs holds the promise of empowering Rust developers to harness the full potential of reinforcement learning with the added benefits of Rust’s safety and efficiency. For more information and to contribute to the development of gymnasium_rs, you can visit the project repository on GitHub: https://github.com/AndrejOrsula/gymnasium_rs.

[dependencies]

gymnasium-rs = "0.1.0"

use gymnasium::{

space::SpaceSampleUniform, Env, GymnasiumResult, PythonEnv, PythonEnvConfig, RenderMode,

};

fn main() -> GymnasiumResult<()> {

let mut env = PythonEnv::<f32>::new(PythonEnvConfig {

env_id: "LunarLanderContinuous-v2".to_string(),

render_mode: RenderMode::Human,

seed: None,

})?;

let mut rng = rand::SeedableRng::from_entropy();

let _reset_return = env.reset();

for _ in 0..1000 {

let action = env.action_space().sample(&mut rng);

let step_return = env.step(action);

if step_return.terminated || step_return.truncated {

let _reset_return = env.reset();

}

}

env.close();

Ok(())

}

The provided Rust code utilizes the gymnasium crate to interact with the "LunarLanderContinuous-v2" environment, a continuous-action reinforcement learning (RL) task where an agent controls a lander to safely touch down on the lunar surface. The main function initializes a PythonEnv with human-rendering mode enabled, allowing visual observation of the environment's state. A random number generator (rng) is seeded using entropy to ensure diverse action sampling. After resetting the environment to obtain the initial state, the code enters a loop that runs for up to 1000 steps. In each iteration, it samples a random action uniformly from the environment's action space and applies this action using the env.step(action) method, which returns the new state, reward, and termination flags. If the episode terminates either by reaching a goal (terminated) or by truncation (truncated), the environment is reset to start a new episode. After completing the loop, the environment is properly closed to release any associated resources. This example demonstrates a fundamental RL interaction loop in Rust, where random actions are taken without any learning strategy, serving as a baseline or a starting point for integrating more sophisticated RL algorithms.

20.3. Conceptual Abstractions in Gym and Gymnasium

Reinforcement learning thrives on the structured interplay between agents and environments. This interaction hinges on a standardized interface that abstracts the complexities of diverse tasks while enabling researchers and practitioners to focus on developing and testing algorithms. OpenAI Gym and its successor, Farama Gymnasium, exemplify this philosophy by providing a robust API for environment interaction. At its core, this API standardizes how agents perceive the environment (via observations), take actions, and receive feedback (rewards and next states). By offering a unified structure, these frameworks democratize RL research, making it accessible to both newcomers and experts while fostering interoperability across projects and tools.

The foundation of these abstractions lies in the concepts of observation and action spaces, which encapsulate the range of inputs and outputs for an agent. An observation space defines the structure and type of data an agent perceives from the environment, such as continuous sensor readings, images, or discrete state labels. Similarly, the action space specifies the set of permissible actions the agent can perform, ranging from discrete choices (e.g., "move left" or "move right") to continuous controls (e.g., "apply force of magnitude 3.5"). These spaces are mathematically defined using properties like dimensionality, bounds, and type (discrete or continuous). For example, in Gym, the Box class models continuous spaces with bounds, while the Discrete class represents a finite set of choices. These abstractions simplify the design of RL algorithms by clearly delineating the range of possible states and actions, reducing ambiguity, and ensuring consistency across environments.

Conceptually, the standardized API and abstractions in Gym and Gymnasium facilitate modularity and interoperability. The reset method initializes the environment and returns the first observation, while the step(action) method executes an action, returning a tuple consisting of the next observation, the received reward, a boolean flag indicating whether the episode has ended, and additional metadata. This simplicity allows RL algorithms to interact seamlessly with a wide range of environments without requiring environment-specific customizations. Moreover, the API supports modularity through wrappers, which extend functionality without altering the core environment. Wrappers can modify observations, rewards, or actions, enabling advanced features like reward shaping, frame stacking for image inputs, or action normalization. By decoupling algorithms from environment-specific details, these abstractions empower researchers to experiment and iterate efficiently.

Mathematically, the abstractions align with the Markov Decision Process (MDP) framework. For a given environment state $s$, an agent selects an action $a$ from the action space $A(s)$. The environment transitions to a new state $s'$ according to a transition probability $P(s' | s, a)$, and the agent receives a reward $r$ defined by the reward function $R(s, a)$. The observation space $O$ encapsulates the partial or full information about the state $s$ that the agent perceives. In Gym and Gymnasium, these components are abstracted as Python classes, making it easier to define, manipulate, and query the properties of the MDP.

For practical implementation, consider the example of creating a simple custom environment using Gym’s API. The environment will simulate a cart moving in a one-dimensional space, where the goal is to reach a target position. Following this, we’ll use Gymnasium wrappers to enhance functionality, such as normalizing rewards or augmenting observations.

The RobustCartPoleEnv is a sophisticated custom gym environment that simulates a cart's movement in a constrained space, designed to provide a challenging yet controlled problem for reinforcement learning algorithms. This environment models a cart that must navigate towards a predefined target position while managing its velocity and position within specified boundaries. Unlike traditional cart-pole environments that focus on balancing, this simulation emphasizes precise movement and strategic navigation, making it an ideal testbed for training agents to develop nuanced movement strategies through trial and error.

# Copy this Python code to Google Colab for testing....

import gym

from gym import spaces

import numpy as np

import warnings

class RobustCartPoleEnv(gym.Env):

"""

A more comprehensive and robust custom gym environment

simulating a cart moving towards a target.

Key Improvements:

- Enhanced state representation

- More complex reward function

- Configurable parameters

- Improved error handling

- Physical constraints

"""

def __init__(self,

min_position=-10.0,

max_position=10.0,

target_position=5.0,

max_steps=200,

noise_std=0.1):

"""

Initialize the environment with configurable parameters.

Args:

min_position (float): Minimum cart position

max_position (float): Maximum cart position

target_position (float): Target position to reach

max_steps (int): Maximum number of steps per episode

noise_std (float): Standard deviation of observation noise

"""

super(RobustCartPoleEnv, self).__init__()

# Validate input parameters

if min_position >= max_position:

raise ValueError("min_position must be less than max_position")

# Define observation and action spaces

self.min_position = min_position

self.max_position = max_position

self.target_position = target_position

self.max_steps = max_steps

self.noise_std = noise_std

# Observation space includes position and velocity

self.observation_space = spaces.Box(

low=np.array([min_position, -np.inf]),

high=np.array([max_position, np.inf]),

dtype=np.float32

)

# Discrete action space (left, stay, right)

self.action_space = spaces.Discrete(3)

# Tracking variables

self.current_step = 0

self.state = None

# Rendering setup

self.render_mode = None

def reset(self, seed=None, options=None):

"""

Reset the environment to a new initial state.

Returns:

np.ndarray: Initial observation

dict: Additional info

"""

super().reset(seed=seed)

# Random initial position and zero initial velocity

self.state = np.array([

self.np_random.uniform(self.min_position, self.max_position),

0.0 # Initial velocity

], dtype=np.float32)

self.current_step = 0

# Add optional observation noise

noisy_state = self.state + self.np_random.normal(0, self.noise_std, size=2)

return noisy_state, {}

def step(self, action):

"""

Execute one time step in the environment.

Args:

action (int): Action to take (0: left, 1: stay, 2: right)

Returns:

tuple: (observation, reward, terminated, truncated, info)

"""

if not self.action_space.contains(action):

warnings.warn(f"Invalid action {action}. Defaulting to 'stay'.")

action = 1

# Update position based on action

velocity_change = {

0: -1.0, # Move left

1: 0.0, # Stay

2: 1.0 # Move right

}[action]

# Update state with simple physics model

new_position = np.clip(

self.state[0] + velocity_change,

self.min_position,

self.max_position

)

new_velocity = new_position - self.state[0]

self.state = np.array([new_position, new_velocity], dtype=np.float32)

# Compute reward with multiple components

distance_to_target = abs(new_position - self.target_position)

proximity_reward = 1.0 / (1 + distance_to_target)

velocity_penalty = abs(new_velocity) * 0.1

reward = proximity_reward - velocity_penalty

# Check termination conditions

terminated = distance_to_target < 0.1

truncated = self.current_step >= self.max_steps

self.current_step += 1

# Add noise to observation

noisy_state = self.state + self.np_random.normal(0, self.noise_std, size=2)

return noisy_state, reward, terminated, truncated, {

'distance_to_target': distance_to_target,

'velocity': new_velocity

}

def render(self, mode='human'):

"""

Render the current environment state.

Args:

mode (str): Rendering mode

"""

if mode == 'human':

print(f"Cart Position: {self.state[0]:.2f}, "

f"Velocity: {self.state[1]:.2f}, "

f"Target: {self.target_position:.2f}")

else:

warnings.warn(f"Render mode {mode} not supported.")

def close(self):

"""

Clean up the environment.

"""

pass

def test_robust_cart_env():

"""

Demonstrate the usage of the RobustCartPoleEnv.

"""

env = RobustCartPoleEnv()

# Test reset

state, _ = env.reset()

print("Initial State:", state)

# Test multiple steps

for step in range(20):

# Randomly sample an action

action = env.action_space.sample()

# Take a step

next_state, reward, terminated, truncated, info = env.step(action)

# Render current state

env.render()

# Check if episode is over

if terminated or truncated:

print(f"Episode finished after {step+1} steps")

break

if __name__ == "__main__":

test_robust_cart_env()

The environment operates by allowing an agent to take discrete actions (move left, stay, or move right) that influence the cart's position and velocity. With each action, the cart's state updates based on a simple physics model that clips its movement within predefined minimum and maximum position bounds. The agent receives a reward function that balances proximity to the target and penalizes excessive velocity, creating a complex optimization problem. The environment introduces realistic elements like observation noise and tracks both position and velocity, requiring the learning agent to develop robust navigation strategies that account for imperfect sensing and movement constraints.

OpenAI Gym is a pioneering toolkit in reinforcement learning that provides a standardized interface for developing, comparing, and benchmarking learning algorithms across diverse problem domains. By defining a consistent API with methods like reset(), step(), and standardized observation_space and action_space attributes, Gym enables researchers and developers to apply identical learning algorithms to multiple environments. This abstraction allows machine learning practitioners to focus on algorithm development rather than environment-specific implementation details, promoting reproducibility and accelerating research in areas like robotics, game playing, and autonomous systems.

Now lets learn Gymnasium framework. The provided Python code leverages the Gymnasium to create and interact with an enhanced version of the classic CartPole environment, named RobustCartPoleEnv. This custom environment extends Gymnasium’s Env class to offer comprehensive state and reward modeling, incorporating continuous action spaces and explicitly defined observation boundaries. By integrating multiple advanced wrappers such as NormalizeReward, TimeLimit, RecordEpisodeStatistics, and ClipAction, the code enhances the base environment's functionality, enabling more sophisticated reward scaling, action constraints, episode termination handling, and performance tracking. Additionally, the implementation includes a demonstration of both single and vectorized environment interactions, showcasing how multiple instances of the environment can be managed concurrently using Gymnasium’s SyncVectorEnv. This setup not only provides a robust framework for reinforcement learning experiments but also ensures compatibility with existing Gymnasium-compatible tools and workflows.

# Copy and paste to Google colab for test ...

import gymnasium as gym

import numpy as np

from gymnasium import spaces

from gymnasium.wrappers import (

NormalizeReward,

TimeLimit,

RecordEpisodeStatistics,

ClipAction

)

from gymnasium.vector import SyncVectorEnv

class RobustCartPoleEnv(gym.Env):

"""

Enhanced Gymnasium-compatible cart environment

with comprehensive state and reward modeling.

"""

def __init__(

self,

min_position=-10.0,

max_position=10.0,

target_position=5.0,

max_steps=200

):

super().__init__()

# Ensure float32 typing for spaces

min_position = np.float32(min_position)

max_position = np.float32(max_position)

# Observation and action spaces with explicit float32

self.observation_space = spaces.Box(

low=np.array([min_position, np.float32(-np.inf)], dtype=np.float32),

high=np.array([max_position, np.float32(np.inf)], dtype=np.float32),

dtype=np.float32

)

# Change action space to Box for wrapper compatibility

self.action_space = spaces.Box(

low=np.float32(-1.0),

high=np.float32(1.0),

shape=(1,),

dtype=np.float32

)

# Environment parameters

self.min_position = min_position

self.max_position = max_position

self.target_position = np.float32(target_position)

self.max_steps = max_steps

# State tracking

self.state = None

self.steps = 0

def reset(self, seed=None, options=None):

"""

Reset the environment with optional seed and options.

Returns:

observation (np.ndarray): Initial state

info (dict): Additional information

"""

super().reset(seed=seed)

# Random initial state with float32

self.state = np.array([

self.np_random.uniform(self.min_position, self.max_position),

np.float32(0.0) # Initial velocity

], dtype=np.float32)

self.steps = 0

return self.state, {}

def step(self, action):

"""

Execute one timestep in the environment.

Returns:

observation, reward, terminated, truncated, info

"""

# Convert continuous action to discrete-like movement

action = np.clip(action[0], -1, 1)

velocity_change = np.float32(action)

# Update position based on action

new_position = np.clip(

self.state[0] + velocity_change,

self.min_position,

self.max_position

)

new_velocity = new_position - self.state[0]

self.state = np.array([new_position, new_velocity], dtype=np.float32)

self.steps += 1

# Compute reward

distance_to_target = abs(new_position - self.target_position)

reward = np.float32(1 / (1 + distance_to_target))

# Check termination conditions

terminated = distance_to_target < 0.1

truncated = self.steps >= self.max_steps

return self.state, reward, terminated, truncated, {}

def create_env_with_wrappers():

"""

Create a Gymnasium environment with multiple advanced wrappers.

Returns:

gym.Env: Fully wrapped environment

"""

# Create base environment

base_env = RobustCartPoleEnv()

# Apply multiple wrappers

wrapped_env = gym.wrappers.RecordEpisodeStatistics(

gym.wrappers.TimeLimit(

gym.wrappers.NormalizeReward(

gym.wrappers.ClipAction(base_env)

),

max_episode_steps=50

)

)

return wrapped_env

def demonstrate_env_interaction():

"""

Demonstrate advanced environment interaction techniques.

"""

# Create environment

env = create_env_with_wrappers()

# Reset environment

state, info = env.reset()

print("Initial State (Wrapped):", state)

# Single environment interaction

for _ in range(20):

# Sample random action (now continuous)

action = env.action_space.sample()

# Step through environment

state, reward, terminated, truncated, info = env.step(action)

print(f"State: {state}, Normalized Reward: {reward}")

# Check episode termination

if terminated or truncated:

print("Episode finished!")

break

# Demonstrate vector environment

vector_env = SyncVectorEnv([create_env_with_wrappers] * 3)

# Batch environment interaction

states, infos = vector_env.reset()

print("\nVector Environment States:", states)

# Batch action sampling and stepping

actions = vector_env.action_space.sample()

next_states, rewards, terminated, truncated, infos = vector_env.step(actions)

print("Vector Environment Next States:", next_states)

if __name__ == "__main__":

demonstrate_env_interaction()

The code begins by defining the RobustCartPoleEnv class, which inherits from Gymnasium’s Env class, and initializes the environment with specific parameters such as position limits, target position, and maximum steps per episode. The reset method initializes the environment’s state with a random position within the defined range and zero velocity, preparing it for a new episode. The step method processes continuous actions by clipping them to a valid range, updating the cart’s position and velocity based on the action, and calculating the reward as an inverse function of the distance to the target position. It also checks for termination conditions, either by achieving proximity to the target or exceeding the maximum number of steps. The create_env_with_wrappers function applies a series of wrappers to the base environment, enhancing its capabilities by normalizing rewards, enforcing action clipping, limiting episode duration, and recording episode statistics. The demonstrate_env_interaction function showcases how to interact with both single and multiple wrapped environments, performing actions, stepping through the environment, and handling episode terminations, thereby illustrating the practical application of the enhanced environment in reinforcement learning workflows.

Compared to the original OpenAI Gym framework, Gymnasium offers a more maintained and actively developed alternative, ensuring better compatibility and extended features for modern reinforcement learning applications. While Gym provides a foundational API for RL environments, Gymnasium builds upon this by introducing additional utilities and wrappers that facilitate more complex and nuanced interactions, such as advanced reward normalization and action clipping mechanisms demonstrated in the provided code. Furthermore, Gymnasium’s support for vectorized environments through tools like SyncVectorEnv allows for more efficient parallel processing and scalability, which is essential for training more sophisticated RL agents. The compatibility wrapper in Gymnasium also ensures that legacy Gym environments remain usable, providing a seamless transition for projects migrating from Gym to Gymnasium. Overall, Gymnasium enhances the usability, flexibility, and performance of the Gym framework, making it a more robust choice for contemporary reinforcement learning research and development.

For a similar example in Rust, the RobustCartPoleEnv implementation is a sophisticated custom gym environment designed to simulate a cart's movement towards a target position. It leverages Rust's powerful type system and performance characteristics, providing a robust alternative to the Python implementation while maintaining the core principles of the original environment. The implementation utilizes the gym-rs crate, ndarray for numerical operations, and the rand crate for stochastic behaviors, creating a flexible and configurable learning environment for reinforcement learning algorithms.

use gym_rs::{

core::{Env, ActionSpace, ObservationSpace},

spaces::{BoxSpace, DiscreteSpace},

};

use ndarray::{Array1, ArrayView1};

use rand::prelude::*;

use std::fmt;

pub struct RobustCartPoleEnv {

min_position: f64,

max_position: f64,

target_position: f64,

max_steps: usize,

noise_std: f64,

current_step: usize,

state: Array1<f64>,

rng: ThreadRng,

}

impl RobustCartPoleEnv {

pub fn new(

min_position: f64,

max_position: f64,

target_position: f64,

max_steps: usize,

noise_std: f64,

) -> Result<Self, String> {

if min_position >= max_position {

return Err("min_position must be less than max_position".to_string());

}

Ok(Self {

min_position,

max_position,

target_position,

max_steps,

noise_std,

current_step: 0,

state: Array1::zeros(2),

rng: thread_rng(),

})

}

}

impl Env for RobustCartPoleEnv {

type Action = usize;

type Observation = Array1<f64>;

fn observation_space(&self) -> ObservationSpace<Self::Observation> {

ObservationSpace::Box(BoxSpace::new(

Array1::from_vec(vec![self.min_position, f64::NEG_INFINITY]),

Array1::from_vec(vec![self.max_position, f64::INFINITY]),

))

}

fn action_space(&self) -> ActionSpace<Self::Action> {

ActionSpace::Discrete(DiscreteSpace::new(3))

}

fn reset(&mut self) -> Self::Observation {

// Random initial position and zero initial velocity

self.state[0] = self.rng.gen_range(self.min_position..=self.max_position);

self.state[1] = 0.0;

self.current_step = 0;

// Add noise to observation

let noise = Array1::from_vec(vec![

self.rng.normal(0.0, self.noise_std),

self.rng.normal(0.0, self.noise_std),

]);

self.state.clone() + &noise

}

fn step(&mut self, action: Self::Action) -> (Self::Observation, f64, bool, bool, Option<String>) {

// Validate action

let action = match action {

0 | 1 | 2 => action,

_ => {

println!("Invalid action {}. Defaulting to 'stay'.", action);

1

}

};

// Update position based on action

let velocity_change = match action {

0 => -1.0, // Move left

1 => 0.0, // Stay

2 => 1.0, // Move right

_ => unreachable!(),

};

// Update state with simple physics model

let new_position = self.state[0] + velocity_change;

let new_position = new_position.clamp(self.min_position, self.max_position);

let new_velocity = new_position - self.state[0];

self.state[0] = new_position;

self.state[1] = new_velocity;

// Compute reward

let distance_to_target = (new_position - self.target_position).abs();

let proximity_reward = 1.0 / (1.0 + distance_to_target);

let velocity_penalty = new_velocity.abs() * 0.1;

let reward = proximity_reward - velocity_penalty;

// Check termination conditions

let terminated = distance_to_target < 0.1;

let truncated = self.current_step >= self.max_steps;

self.current_step += 1;

// Add noise to observation

let noise = Array1::from_vec(vec![

self.rng.normal(0.0, self.noise_std),

self.rng.normal(0.0, self.noise_std),

]);

let noisy_state = self.state.clone() + &noise;

(noisy_state, reward, terminated, truncated, None)

}

}

impl fmt::Display for RobustCartPoleEnv {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

write!(

f,

"Cart Position: {:.2}, Velocity: {:.2}, Target: {:.2}",

self.state[0], self.state[1], self.target_position

)

}

}

// Example usage function

fn test_robust_cart_env() {

let mut env = RobustCartPoleEnv::new(

-10.0, // min_position

10.0, // max_position

5.0, // target_position

200, // max_steps

0.1 // noise_std

).expect("Failed to create environment");

// Test reset

let initial_state = env.reset();

println!("Initial State: {:?}", initial_state);

// Test multiple steps

for step in 0..20 {

// Randomly sample an action using ThreadRng

let action = thread_rng().gen_range(0..3);

// Take a step

let (next_state, reward, terminated, truncated, _) = env.step(action);

// Print current state

println!("Step {}: {}, Reward: {:.2}", step, env, reward);

// Check if episode is over

if terminated || truncated {

println!("Episode finished after {} steps", step + 1);

break;

}

}

}

fn main() {

test_robust_cart_env();

}

This Rust environment extends the basic gym environment interface by introducing enhanced state representation, a complex reward function, and configurable parameters. It features a discrete action space with three possible actions (move left, stay, move right), an observation space representing cart position and velocity, and a sophisticated reward mechanism that balances proximity to the target and velocity penalties. The implementation includes robust error handling, noise injection for observation uncertainty, and a flexible configuration system that allows researchers to fine-tune environment parameters such as position limits, target position, maximum steps, and observation noise.

While the Rust implementation closely mirrors the Python gym environment, there are subtle differences in implementation that may require careful consideration when transitioning between platforms. The gym-rs crate attempts to provide a similar interface to Python's gym, but developers should anticipate potential variations in random number generation, type handling, and specific trait implementations. For seamless cross-language reinforcement learning experiments, additional wrapper code or careful parameter matching might be necessary to ensure consistent behavior between Python and Rust environments.

Now lets see other implementation using Rust’s gymnasium crate. The gymnasium-rs implementation of the RobustCartPoleEnv is a sophisticated reinforcement learning environment that simulates a cart moving along a one-dimensional space with the goal of reaching a target position. Unlike traditional CartPole environments, this version introduces more nuanced state management, continuous action spaces, and flexible reward mechanisms. It demonstrates Rust's capabilities in creating performant and type-safe reinforcement learning simulation environments, leveraging the gymnasium-rs crate to provide a similar interface to Python's Gymnasium library.

[dependencies]

gymnasium-rs = "0.1.0" # Use the latest version

ndarray = "0.15.6"

rand = "0.8.5"

use gymnasium_rs::{

Env,

EnvResult,

Space,

BoxSpace,

ActionSpace,

ResetMode,

StepResult,

};

use ndarray::{Array1, ArrayView1};

use rand::Rng;

use std::sync::Arc;

/// A robust CartPole environment with enhanced state and reward modeling

pub struct RobustCartPoleEnv {

min_position: f32,

max_position: f32,

target_position: f32,

max_steps: usize,

current_state: Array1<f32>,

steps: usize,

rng: rand::rngs::ThreadRng,

}

impl RobustCartPoleEnv {

pub fn new(

min_position: f32,

max_position: f32,

target_position: f32,

max_steps: usize

) -> Self {

Self {

min_position,

max_position,

target_position,

max_steps,

current_state: Array1::zeros(2),

steps: 0,

rng: rand::thread_rng(),

}

}

}

impl Env for RobustCartPoleEnv {

fn observation_space(&self) -> Space {

Space::Box(BoxSpace::new(

Array1::from_vec(vec![self.min_position, f32::NEG_INFINITY]),

Array1::from_vec(vec![self.max_position, f32::INFINITY]),

))

}

fn action_space(&self) -> ActionSpace {

ActionSpace::Box(BoxSpace::new(

Array1::from_vec(vec![-1.0]),

Array1::from_vec(vec![1.0]),

))

}

fn reset(&mut self, _mode: Option<ResetMode>) -> EnvResult {

// Random initial position within defined range

let initial_position = self.rng.gen_range(self.min_position..=self.max_position);

self.current_state = Array1::from_vec(vec![

initial_position,

0.0 // Initial velocity

]);

self.steps = 0;

EnvResult {

observation: self.current_state.to_owned(),

info: Default::default(),

}

}

fn step(&mut self, action: ArrayView1<f32>) -> StepResult {

// Clip and apply action